The Solar 10.7B model marks a new phase in the development of artificial intelligence and natural language processing. Launched by Upstage AI of South Korea, this cutting-edge model has 10.7 billion parameters and demonstrates new ways to scale large language models in terms of both size and performance.

This article takes an in-depth look at the capabilities of Solar LLM 10.7B and how it compares with other leading LLMs. Let's start by getting acquainted with the model.


What is Solar 10.7B?

Solar 10.7B is a large language model (LLM). With a whopping 10.7 billion parameters (think of them as small bits of knowledge), it has been trained on huge amounts of text data. This allows it to interpret and understand text and to generate fluent, human-like responses to a wide variety of prompts and questions.

What makes Solar 10.7B special?

Solar 10.7B stands out because of its efficient scaling approach, called depth up-scaling (DUS). This technique combines two copies of an existing base model into one deeper model and then continues pre-training it so that the merged layers work well together. The outcome? Solar 10.7B performs well on many NLP tasks, including translation, generating various forms of creative content, and answering questions informatively.

There is also a variant called Solar 10.7B-Instruct, a further fine-tuned version of the original model that is trained specifically to follow instructions. If you ask it to write a poem about a robot cat, for example, it will try to produce an original poem that meets the requirements of the task.

Why is Solar 10.7B interesting?

LLMs like Solar 10.7B have the potential to change how we interact with computers. They can be used for:

More natural and engaging chatbots: imagine talking to a virtual assistant that understands your intentions, directions, or suggestions and responds as a helpful, informative source.

Enhanced machine translation: removing language barriers and enabling smooth, effective communication between people from different cultures.

Content creation assistance: Solar 10.7B can help writers who are stuck on what to write next, or marketing teams looking for inspiration for their next campaign.

Solar 10.7B is currently in beta, but it already gives us an idea of the potential of LLMs. As these models progress and become an integral part of our daily lives, they could transform how we work, learn, and interact with machines.

Architecture of Solar 10.7B

Neural Network Architecture

At the core of Solar LLM 10.7B lies a complex neural network that supports all of its features. The architecture is built around depth up-scaling, a technique that increases the depth of the model by adding layers. This strategic change makes the model not only larger but also more capable, improving its overall performance.
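To see why adding layers increases the parameter count, the sketch below tallies the weights of a Llama-2-style decoder. The dimensions are assumptions, taken to match Mistral 7B's published configuration (hidden size 4096, MLP size 14336, 32 query heads sharing 8 key/value heads, a 32,000-token vocabulary, untied input and output embeddings), and the up-scaled model is assumed to have 48 layers. `llama_style_param_count` is a hypothetical helper, not part of any library.

```python
def llama_style_param_count(n_layers, hidden=4096, intermediate=14336,
                            n_heads=32, n_kv_heads=8, vocab=32000):
    """Approximate weight count of a Llama-2-style decoder without biases.

    Default dimensions are an assumption (Mistral 7B's published config).
    """
    head_dim = hidden // n_heads
    kv_dim = n_kv_heads * head_dim                          # grouped-query attention
    attention = 2 * hidden * hidden + 2 * hidden * kv_dim   # q, o plus k, v projections
    mlp = 3 * hidden * intermediate                         # gate, up and down projections
    norms = 2 * hidden                                      # two RMSNorm vectors per block
    per_layer = attention + mlp + norms
    embeddings = 2 * vocab * hidden                         # input embedding + output head
    return n_layers * per_layer + embeddings + hidden       # + final RMSNorm


for layers in (32, 48):
    print(layers, f"{llama_style_param_count(layers) / 1e9:.2f}B")
```

Under these assumptions, 32 layers give about 7.24 billion weights and 48 layers about 10.73 billion, consistent with the "7B" and "10.7B" labels. Every extra layer adds the same fixed block of attention and MLP weights, so the parameter count grows linearly with depth.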

Core Components

1. Depth Up-Scaling

The Solar 10.7B architecture incorporates the Depth Up-Scaling method, which differs from other scaling techniques in that it only increases the depth of the model and adds no extra operational complexity, such as the routing required by mixture-of-experts models. This lets Solar 10.7B deliver high performance without special training or inference infrastructure, which makes it an effective and efficient model.
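The layer surgery behind DUS can be sketched on a plain Python list. Following the SOLAR paper's description, a 32-layer base is duplicated, 8 layers are dropped from the end of the first copy and from the start of the second, and the two remaining stacks are concatenated. Integers stand in for transformer layers here; with real layers the second copy would carry duplicated weights, which `copy.deepcopy` imitates. `depth_up_scale` is a hypothetical name, and the continued pre-training that follows is not shown.

```python
import copy


def depth_up_scale(base_layers, n_removed):
    """Sketch of DUS: trim two copies of a layer stack and concatenate them.

    Copy A drops its last `n_removed` layers, copy B drops its first
    `n_removed` layers, and B is appended after A.
    """
    n = len(base_layers)
    if not 0 <= n_removed < n:
        raise ValueError("n_removed must be in [0, number of layers)")
    copy_a = base_layers[: n - n_removed]
    # deepcopy so the duplicated layers own independent (copied) weights
    copy_b = copy.deepcopy(base_layers[n_removed:])
    return copy_a + copy_b


# Stand-in "layers": just the indices of a 32-layer base model
layers = depth_up_scale(list(range(32)), n_removed=8)
print(len(layers))     # 48
print(layers[22:26])   # [22, 23, 8, 9]
```

The seam in the middle (layer 23 followed by a copy of layer 8) is where the two copies meet; continued pre-training is what lets the model smooth over that discontinuity.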

2. Integrated Weights

The model is initialized with pretrained weights from the Mistral 7B model, which gives it a strong, stable starting point before continued pre-training. Building on these weights helps the model perform well and consistently across numerous tasks and benchmarks.

Design Choices

1. Efficiency and Performance

Solar LLM 10.7B is designed to deliver strong performance at a compact size; its goal is to be both practical and effective. This choice allows the model to handle demanding language-processing tasks while keeping computational costs relatively low.

2. Innovation

The architecture represents a new generation of models and uses ideas like Depth Up-Scaling to improve performance. By questioning traditional scaling approaches and prioritizing efficiency, Solar 10.7B takes a parameter-efficient path to better large language models.

Solar 10.7B stands out in the field of AI because its architecture combines innovation, optimization, and effectiveness. Its structure, neural network design, and key design decisions have made it one of the leading large language models.

Training Methods for Solar 10.7B and Leading LLMs

The training methods used to develop Solar 10.7B are quite remarkable and set it apart from other top-performing LLMs.

Depth Up-Scaling (DUS) Methodology

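At the core of Solar LLM 10.7B's training is a novel technique called Depth Up-Scaling (DUS). This approach combines an architectural change, which increases the depth of the model by adding layers, with continued pre-training. The added depth does increase the parameter count (from roughly 7 billion in the base model to 10.7 billion), but without the extra components that approaches such as mixture-of-experts require.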

In DUS, a 32-layer base model (the Llama 2 architecture initialized with Mistral 7B weights) is duplicated, layers are trimmed from the end of one copy and from the start of the other, and the two copies are concatenated into a deeper model. The entire model then undergoes continued pre-training. Reusing existing pretrained weights in this way lets Solar 10.7B outperform conventionally scaled designs without a proportional increase in resources.

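The following code is a toy illustration of the idea on a small Keras model, not Upstage's implementation. The function takes a base model, a scale factor, and training data. It copies the last `scale_factor` hidden layers (including their weights), inserts the copies into the stack just before the output layer, and then fine-tunes the deeper model. It assumes those hidden layers keep the same width, so that the copied weights fit.

```python
import tensorflow as tf


def depth_up_scaling(base_model, scale_factor, X_train, y_train, epochs=5):
    # Split the Sequential model into hidden layers and the output layer
    hidden, head = base_model.layers[:-1], base_model.layers[-1]
    to_copy = hidden[-scale_factor:]

    # Create fresh copies of the layers to be duplicated (names must be unique)
    new_layers = []
    for layer in to_copy:
        config = layer.get_config()
        config["name"] = layer.name + "_dus_copy"
        new_layers.append(layer.__class__.from_config(config))

    # Deeper model: original hidden layers, their copies, then the output layer
    # (the original layer objects are shared with base_model)
    upscaled_model = tf.keras.Sequential(hidden + new_layers + [head])
    upscaled_model(X_train[:1])  # call once so the new layers build their weights

    # Initialize each copy with the weights of the layer it duplicates
    for src, dst in zip(to_copy, new_layers):
        dst.set_weights(src.get_weights())

    # Fine-tune (continue training) the upscaled model
    upscaled_model.compile(loss="categorical_crossentropy", optimizer="adam")
    upscaled_model.fit(X_train, y_train, epochs=epochs)
    return upscaled_model
```

Instruction Fine-Tuning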

Beyond DUS, Solar 10.7B goes through a detailed instruction fine-tuning process. This includes supervised fine-tuning (SFT) and direct preference optimization (DPO), using datasets such as c-s-ale/alpaca-gpt4-data, Open-Orca/OpenOrca, in-house data, Intel/orca_dpo_pairs, and allenai/ultrafeedback_binarized_cleaned. This multi-faceted fine-tuning helps Solar 10.7B follow instructions and makes it useful across NLP tasks such as question answering, generating contextually appropriate responses, and even programming.
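The DPO stage can be illustrated with the per-example loss from the original DPO formulation: the policy is rewarded for raising the log-probability of the preferred response, relative to a frozen reference model, more than that of the rejected one. This is a minimal sketch, not Upstage's training code; the inputs are assumed to be summed token log-probabilities, and `beta=0.1` is an illustrative default rather than Solar's actual setting.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Direct Preference Optimization loss for a single preference pair.

    Each argument is the summed log-probability of a response under either
    the policy being trained or the frozen reference model.
    """
    # How much more the policy (vs. the reference) prefers chosen over rejected
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    z = beta * margin
    # -log(sigmoid(z)) computed as a numerically stable softplus(-z)
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


print(dpo_loss(-5.0, -5.0, -5.0, -5.0))      # policy == reference: log 2
print(dpo_loss(-10.0, -20.0, -12.0, -18.0))  # policy favors the chosen answer
```

When the policy and the reference agree, the margin is zero and the loss equals log 2; it decreases as the policy increasingly prefers the chosen response over the rejected one.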

Comparison to Other LLMs

Many other LLMs scale up using mixture-of-experts (MoE) architectures, whereas Solar 10.7B takes a simpler approach. MoE models can be computationally expensive and complex to train and deploy, while Solar 10.7B's DUS technique achieves high performance without that added operational complexity.

Additionally, Solar 10.7B's instruction fine-tuning combines SFT with DPO, which distinguishes it from models that rely on supervised fine-tuning alone. Together with diverse training data, this combination helps the model develop richer, more nuanced language comprehension.

In all, the training techniques behind Solar 10.7B, namely the DUS approach and the detailed instruction fine-tuning process, have helped it achieve high performance and become a leading LLM.

Performance Metrics for Benchmarking LLMs

To evaluate large language models such as Solar 10.7B against other prominent models, a number of metrics are used to assess performance across tasks. Here are the key metrics commonly used to benchmark LLMs.

1. Perplexity

Perplexity is one of the most standard measures of a language model's performance. It reflects how well the model predicts a given sample of text: the better the model is at predicting the next word in a sequence, the lower its perplexity. Solar 10.7B's perplexity is therefore an essential measure of how well it performs in comparison to other models.
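For a causal LLM like Solar 10.7B, perplexity is typically computed from the log-probabilities the model assigns to each observed token. The minimal sketch below assumes those per-token natural-log probabilities have already been obtained from some model; the helper name is hypothetical.

```python
import math


def perplexity_from_logprobs(token_logprobs):
    """Perplexity = exp(-mean natural-log probability of the observed tokens)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# A model that assigns probability 0.25 to every observed token
print(perplexity_from_logprobs([math.log(0.25)] * 4))
```

A model that gives every observed token probability 0.25 has a perplexity of 4, as if it were choosing uniformly among four options at each step; a perfect predictor would reach 1.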

The following snippet computes the perplexity of a classifier-style language model. The function takes the model, the test inputs, and one-hot encoded target tokens. It obtains the model's predicted probabilities, sums the log-probabilities assigned to the correct tokens, and converts the average negative log-likelihood into perplexity, which reflects how well the model predicts the next word in a sequence.

```python
import numpy as np


def perplexity(model, X_test, y_test):
    """Perplexity of a next-token classifier on held-out data.

    X_test: model inputs; y_test: one-hot targets of shape (n_tokens, vocab_size).
    """
    # Predicted probability distribution over the vocabulary for each position
    probs = model.predict(X_test)

    # Clip probabilities to avoid log(0) and numerical instability
    probs = np.clip(probs, 1e-10, 1 - 1e-10)

    # Sum the log-probabilities assigned to the correct next tokens
    log_likelihood = np.sum(np.log(probs) * y_test)

    # Perplexity is the exponential of the average negative log-likelihood per token
    return np.exp(-log_likelihood / len(y_test))
```

2. Accuracy

Accuracy measures how often a model's predictions are correct. For LLMs, it is usually measured by how well the model answers questions, generates text, or performs specific tasks. Solar 10.7B's accuracy on tasks such as question answering, code generation, and logical reasoning is central to its overall performance and to how it compares with other models.
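For question answering, accuracy is often reported as exact match after light normalization. The sketch below uses one common convention, assumed here for illustration (lowercasing, stripping punctuation, collapsing whitespace); benchmark suites differ in their exact rules, and the function names are hypothetical.

```python
import string


def normalize(text):
    """Lowercase, strip punctuation and collapse whitespace before comparing."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def exact_match_accuracy(predictions, references):
    """Fraction of predictions that match their reference after normalization."""
    if not references or len(predictions) != len(references):
        raise ValueError("need equal-length, non-empty prediction and reference lists")
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


print(exact_match_accuracy(["Seoul.", "42", "blue"], ["seoul", "42", "red"]))
```

Here two of the three answers match once case and punctuation are ignored, so the accuracy is 2/3.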

3. Throughput

Throughput is the amount of work a model can do in a given time, that is, how quickly it produces results (often measured in tokens per second). It is an important measure of an LLM's efficiency and speed: higher throughput means the model can process more data and more tasks in less time. Solar 10.7B's throughput matters most for use cases that demand real-time or high-speed language processing.
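Throughput can be measured by timing generation over a set of prompts and dividing the number of generated tokens by the elapsed time. In this sketch, `generate` is a hypothetical callable standing in for whatever inference API is used, and `fake_generate` merely simulates a model for demonstration.

```python
import time


def measure_throughput(generate, prompts):
    """Return generated tokens per second over a batch of prompts.

    `generate` is a placeholder for a real inference call and is assumed to
    return the list of generated token ids for a single prompt.
    """
    start = time.perf_counter()
    total_tokens = sum(len(generate(p)) for p in prompts)
    elapsed = time.perf_counter() - start
    return total_tokens / elapsed


def fake_generate(prompt):
    """Hypothetical stand-in for a model: 10 ms per call, 20 tokens out."""
    time.sleep(0.01)
    return list(range(20))


print(f"{measure_throughput(fake_generate, ['a', 'b', 'c']):.0f} tokens/s")
```

Timing the whole batch, rather than single calls, gives a steadier estimate; real measurements should also fix the prompt lengths and generation settings so that results are comparable across models.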

Scaling Challenges and Computational Requirements for Solar 10.7B

The SOLAR-10.7B model faces several key scaling challenges and computational requirements:

- Computational Demands: SOLAR-10.7B is very computationally intensive, which makes it hard to access for users or organizations without enough computing power. Both training and running inference require significant amounts of electricity and capable hardware.

- Depth Up-Scaling Limitations: The Depth Up-Scaling (DUS) technique used to scale the SOLAR model has limitations. Because of hardware constraints, the researchers removed 8 layers from each copy of the base model and could not fully explore the best hyperparameters for the DUS process. More extensive experiments are needed to find the optimal number of layers to remove.

- Environmental Concerns: Training and running SOLAR-10.7B demand large amounts of energy, and critics have raised concerns about the model's environmental impact. As the use of and demand for large language models grow rapidly, so do concerns about how their development and use affect the environment.

- Infrastructure Requirements: Deploying and using SOLAR-10.7B requires substantial computational resources, such as GPUs or TPUs, ample memory, and high-speed interconnects. This can put the model out of reach for small businesses and individuals who lack such infrastructure.
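A back-of-the-envelope estimate shows why the infrastructure requirements are significant: the weights alone need parameters × bits per parameter / 8 bytes. This sketch counts only the weights (not activations, the KV cache, or optimizer state, which add substantially during training) and uses the nominal 10.7 billion parameters; the function name is hypothetical.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(10.7e9, bits):5.1f} GB")
```

At 16-bit precision this comes to about 21.4 GB for the weights alone, more than many consumer GPUs hold; 8-bit or 4-bit quantization shrinks the footprint proportionally, possibly at some cost in accuracy.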

Together, the model's computational demands, the limitations of Depth Up-Scaling, environmental concerns, and infrastructure requirements are the major obstacles to scaling SOLAR-10.7B and making it widely accessible. Unlocking the full potential of large language models like SOLAR-10.7B will depend on future research that reduces these limitations through better computational resources and improved training methods.

Comparison of Solar 10.7B with GPT-3 and Other LLMs

The SOLAR-10.7B model demonstrates several key differences and advantages compared to GPT-3 and other leading large language models:

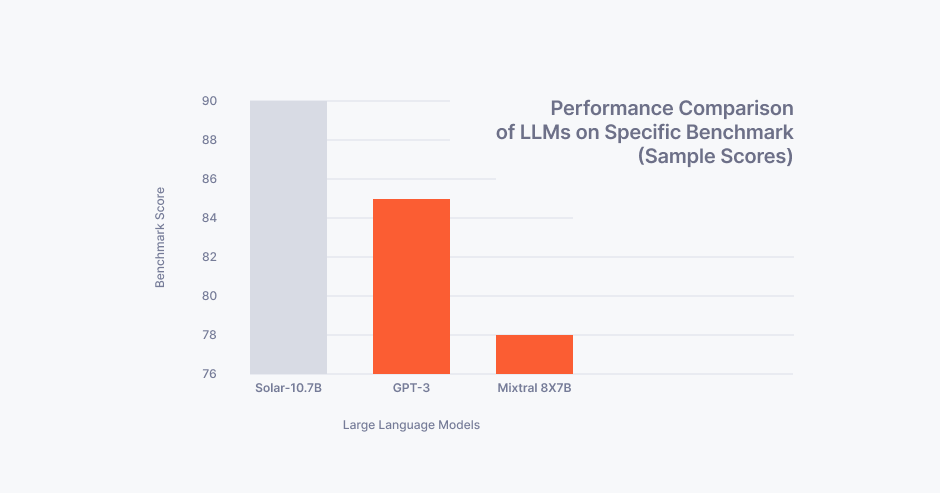

Performance

Solar LLM 10.7B outperforms models of up to 30 billion parameters, including the recent Mixtral 8X7B, on a variety of benchmarks such as ARC, HellaSwag, MMLU, TruthfulQA, and Winogrande. Its H6 score, the average across these five benchmarks plus GSM8K, is generally higher than that of other models of comparable size.
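The H6 score is a plain arithmetic mean. This sketch assumes the six tasks are those of the Hugging Face Open LLM Leaderboard (ARC, HellaSwag, MMLU, TruthfulQA, Winogrande, and GSM8K); the scores in the example are made-up numbers for illustration, not Solar's results.

```python
H6_TASKS = ("ARC", "HellaSwag", "MMLU", "TruthfulQA", "Winogrande", "GSM8K")


def h6_average(scores):
    """Unweighted mean of the six benchmark scores (all must be present)."""
    missing = [task for task in H6_TASKS if task not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return sum(scores[task] for task in H6_TASKS) / len(H6_TASKS)


# Hypothetical scores, for illustration only
example = {"ARC": 60.0, "HellaSwag": 80.0, "MMLU": 65.0,
           "TruthfulQA": 70.0, "Winogrande": 75.0, "GSM8K": 70.0}
print(h6_average(example))  # 70.0
```

Because every task is weighted equally, a large gain on one benchmark (for example, GSM8K math) can lift H6 noticeably even if the other scores barely move.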

Scalability

SOLAR 10.7B was trained using a recently proposed technique called Depth Up-Scaling (DUS), which improves the scalability of language models without resorting to mixture-of-experts. This makes SOLAR 10.7B more flexible and easier for organizations to deploy than models such as Falcon that need large amounts of computational power.

Instruction-Following

SOLAR-10.7B-Instruct, a version specialized in following instructions, outperforms even larger models such as Mixtral 8X7B-Instruct on several key benchmarks. This demonstrates SOLAR-10.7B's solid ability to understand and carry out tasks according to detailed guidelines.

Use Cases

Solar LLM 10.7B's performance and scalability make it well suited to natural language processing tasks such as language understanding, question answering, and text generation. The model is openly available under the Apache 2.0 license, which makes it easier to use in commercial products and services.

SOLAR-10.7B is a leap forward in the development of large language models, delivering results that compare favorably with GPT-3 and other competitors of similar or larger size. These strengths make it an efficient tool for both research and practical, real-world use.

Fine-Tuning and Customization in Solar 10.7B

The SOLAR-10.7B model offers significant advantages when it comes to fine-tuning and customization for specific applications:

Fine-Tuning Capabilities

SOLAR-10.7B serves as a reliable base for further improvement and development. The researchers used it to show that even simple instruction fine-tuning improves the model significantly, so models fine-tuned from the SOLAR-10.7B pre-trained model can be expected to show noteworthy performance gains.

Specialized Variants

Building on the SOLAR-10.7B base model, the researchers introduced SOLAR-10.7B-Instruct, which starts from the pre-trained model and is further trained to follow complex instructions. This variant shows improved performance on relevant benchmarks compared with similar models.
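Instruction-tuned variants expect prompts in a specific conversational layout. The helper below is a sketch that assumes the `### User:` / `### Assistant:` format shown on the SOLAR-10.7B-Instruct model card; the function name is hypothetical, and in practice the tokenizer's built-in chat template is the authoritative source.

```python
def build_instruct_prompt(user_message):
    """Format a single-turn prompt for SOLAR-10.7B-Instruct.

    ASSUMPTION: this "### User:" / "### Assistant:" layout follows the public
    model card; verify it against the tokenizer's own chat template.
    """
    return f"### User:\n{user_message.strip()}\n\n### Assistant:\n"


print(build_instruct_prompt("Write a short poem about a robot cat."))
```

The prompt ends right after the assistant marker, so the model's continuation is its answer; mismatched templates are a common reason instruction-tuned models underperform.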

Adaptability

SOLAR-10.7B is fine-tunable and highly scalable, so it can be adapted relatively easily to areas such as personalized education, customer support, and automated content creation. It can be adjusted to the specific requirements of each application.

Comparison to Other LLMs

Among top large language models such as GPT-3 and Mixtral 8X7B, SOLAR-10.7B stands out as efficient and robust. Compared with models that have a similar number of parameters, it performs well and outperforms competitors in accuracy across numerous datasets. This suggests that the Depth Up-Scaling (DUS) approach used in SOLAR-10.7B is effective, and that it leaves more room than other scaling techniques for fine-tuning and optimizing outcomes.

SOLAR-10.7B's support for specialized fine-tuned versions and its flexibility make it a good fit for organizations and researchers who want large language models optimized for their purposes. Its strong performance relative to LLMs of comparable or larger size demonstrates the promise of Depth Up-Scaling for efficient model scaling and adaptation.

Data Sources and Preprocessing for Training Solar 10.7B

Solar LLM 10.7B was trained on data from various sources, and the data was preprocessed in several ways.

Data Sources

The training data for SOLAR-10.7B is split between publicly available data and data available only within the organization. The unreleased in-house datasets can be replaced with comparable open-access data, such as MetaMathQA (Yu et al.). This mix enables the model to perform well across the natural language processing tasks mentioned above.

Preprocessing Techniques

Data-verse is also mentioned as a tool for data preprocessing, which covers cleaning, formatting, and structuring the training data to prepare it for training the large language model. Data preparation should not be underestimated, since it contributes significantly to accurate results.
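The exact Data-verse pipeline is not described here, so the following is a generic sketch of typical cleaning steps rather than Data-verse's API: normalize whitespace, drop very short documents, and remove exact duplicates by hashing. The `min_words` threshold and the function name are hypothetical.

```python
import hashlib
import re


def clean_and_dedupe(documents, min_words=5):
    """Generic cleaning pass: normalize whitespace, drop short docs, remove exact duplicates."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = re.sub(r"\s+", " ", doc).strip()
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate, ignoring case
        seen.add(digest)
        cleaned.append(text)
    return cleaned


docs = ["Hello   world this is fine", "hello world this is fine",
        "too short", "Another  valid\ndocument with words"]
print(clean_and_dedupe(docs))
```

Hashing keeps memory use small on large corpora; catching near-duplicates would need fuzzier techniques such as MinHash, which this sketch does not attempt.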

Data Augmentation Strategies

Specific details of the data augmentation strategy are not disclosed, but augmentation is generally used to enrich the training set with additional samples. Common techniques include back-translation, adding noise to the input data, and generating synthetic data, all aimed at improving the model overall.
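Of the techniques above, noise injection is the simplest to show. This sketch randomly drops words with probability `p`; the function name and parameters are illustrative assumptions, and back-translation or synthetic-data generation would require a separate model.

```python
import random


def word_dropout(sentence, p=0.1, seed=None):
    """Noise-injection augmentation: drop each word independently with probability p."""
    rng = random.Random(seed)
    words = sentence.split()
    kept = [w for w in words if rng.random() >= p]
    # Never return an empty sample; fall back to the original sentence
    return " ".join(kept) if kept else sentence


print(word_dropout("the quick brown fox jumps over the lazy dog", p=0.2, seed=0))
```

Passing a seed makes the augmentation reproducible, which helps when comparing training runs; the surviving words always keep their original order.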

The training of SOLAR-10.7B could be further optimized by gathering more varied data, refining preprocessing with Data-verse tools, and exploring data augmentation methods that improve the model's robustness and effectiveness across a broad spectrum of NLP tasks.

Use Cases for Solar 10.7B in Industry Applications

Solar 10.7B, a large language model with 10.7 billion parameters, has found successful implementation in various industry applications due to its unique strengths. Here are some key real-world applications where Solar LLM 10.7B has excelled:

Instruction-Following Tasks

Solar 10.7B-Instruct, a version fine-tuned specifically for instruction following, quickly established itself as an adept follower of instructions and outperformed larger, more sophisticated models. This makes it a valuable tool for workplace tasks that require understanding written language and following instructions.

Fine-Tuning Applications

Solar 10.7B is versatile and scalable, so it can easily be adapted to many different fine-tuning tasks. This flexibility, together with its efficient design rooted in the Llama 2 architecture, makes it a useful language-processing tool that works quickly and accurately.

Efficiency and Effectiveness

Solar 10.7B challenges the belief that more resources and greater model complexity always produce better AI models. It has demonstrated high efficiency and effectiveness in achieving its goals, and it represents a new level of what large language models (LLMs) can offer, proving versatile and reliable across many tasks.

Data Contamination Testing

Solar 10.7B-Instruct was subjected to data contamination tests to check that its training data did not include benchmark datasets. This helps ensure that its benchmark scores reflect genuine capability rather than memorized test data, which matters for applications that demand accurate and trustworthy results.
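A common way to screen for contamination is to measure n-gram overlap between benchmark items and training documents. This minimal sketch assumes whitespace tokenization and 8-grams; it is not Upstage's procedure, the function names are hypothetical, and the threshold for flagging an item would be a judgment call.

```python
def ngrams(text, n):
    """Set of lowercase whitespace-token n-grams in `text`."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_ratio(benchmark_item, training_doc, n=8):
    """Fraction of the benchmark item's n-grams that also occur in a training document."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0  # item shorter than n tokens: nothing to compare
    return len(item_grams & ngrams(training_doc, n)) / len(item_grams)


item = "the quick brown fox jumps over the lazy dog today"
print(contamination_ratio(item, "intro " + item + " outro"))  # fully contained
```

A ratio near 1 means the benchmark item appears almost verbatim in the training document and should be investigated; in practice the check runs against an index of the whole corpus rather than one document at a time.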

Versatility and Robustness

Solar 10.7B is a comprehensive model that does not require the extensive, expensive adaptations many other sophisticated models need, making it flexible and effective in many contexts. This positions Solar 10.7B not only as a high-performance model but also as a commercially relevant one across a broad range of industries.

Its major advantages include accuracy on instruction-following tasks, flexibility for fine-tuning, speed, productivity, reliability backed by contamination testing, and applicability across numerous industries. These qualities make it a valuable tool for natural language processing and AI applications.

Resource Efficiency and Cost Analysis for Solar 10.7B

Solar LLM 10.7B, from Upstage, introduces a technique known as depth up-scaling (DUS) for scaling LLMs efficiently. With 10.7 billion parameters, the model proves highly effective across different NLP tasks. Its key breakthrough is the depth up-scaling technique, which lets the model reach high accuracy without requiring large computational resources. This makes the model easier to scale up, and it compares favorably in efficiency with models such as Qwen 14B and Mistral 7B.

On cost, Solar Mini, the compact version of Solar, shows that smaller models can perform exceptionally well while reducing computation time and improving responsiveness and efficiency. Built on the 32-layer Llama 2 architecture, Solar Mini applies depth up-scaling, which combines depth-wise scaling with continued pre-training, making scaling simpler and faster than other methods. This approach keeps Solar Mini compact while outperforming competitors on most benchmarks.

In addition, Solar Mini has been integrated with BentoML, an AI inference platform that focuses on cost-effective, fast execution and appeals to users who want both speed and low costs. BentoML is quite versatile: it lets users build workflows across different frameworks with ease and is compatible with scikit-learn, PyTorch, and TensorFlow. Solar LLM 10.7B and its smaller sibling, Solar Mini, show that techniques such as depth up-scaling can scale models effectively, improving performance and making them more cost-effective. Serving Solar models through platforms such as BentoML further improves their cost-effectiveness and practicality for real-world deployment alongside various ML frameworks.

Model Interpretability and Explainability in Solar 10.7B

Solar 10.7B uses various techniques to make its output more interpretable and explainable to users and developers.

Solar LLM 10.7B employs depth up-scaling, which lets the model scale efficiently without requiring large computational resources. The method combines depth-wise scaling with continued pre-training, keeping the parameter count modest relative to much larger models while improving performance on natural language processing tasks. Through this comparatively simple design, Solar 10.7B aims to keep its output interpretable and explainable even at a large scale.

Moreover, Solar Mini, the compact version of Solar, improves interpretability through additional components such as Instruction Tuning and Alignment Tuning. Instruction tuning trains the model to pay attention to the instructions it is given and follow them accurately, for instance when fulfilling a request in Korean. Alignment tuning further fine-tunes the model to produce the answers preferred by humans or by stronger language models, which increases the relevance and credibility of its responses.

Solar Mini's easy integration with RAG (retrieval-augmented generation) systems and with components such as the Layout Analyzer also makes it more interpretable. RAG systems let the model ground its answers in retrieved documents, while the Layout Analyzer converts complex document formats into a form the model can easily process. This makes it easier for the model to extract relevant information and present it to the end user in an understandable form.
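The retrieval step of a RAG system can be sketched with bag-of-words cosine similarity standing in for a learned embedding model. This is an illustrative assumption, not Upstage's RAG stack: the helper names are hypothetical, and a production system would use dense embeddings and a vector index.

```python
import math
import re
from collections import Counter


def _vector(text):
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = _vector(query)
    return sorted(documents, key=lambda d: cosine(q, _vector(d)), reverse=True)[:k]


def build_rag_prompt(query, documents, k=2):
    """Prepend the retrieved passages so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = ["Solar 10.7B was released by Upstage.", "Bananas are yellow.",
        "Upstage is based in South Korea."]
print(build_rag_prompt("Who released Solar 10.7B?", docs, k=1))
```

Because the answer is drawn from passages included in the prompt, a user can check the response against its sources, which is where the interpretability benefit comes from.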

Together, depth up-scaling, instruction tuning, alignment tuning, RAG systems, and specialized components help Solar 10.7B and its derivative Solar Mini produce results that are easier for users and developers to understand.

Handling Bias and Ethical Considerations in Solar 10.7B

Solar 10.7B has made a concerted effort to address bias and ethical considerations in its development and deployment:

Mitigating Bias:

- The training data for Solar 10.7B was carefully curated to minimize data contamination and biases, a critical concern in AI development. This rigorous data handling and processing protocol helps ensure the reliability and integrity of the model's outputs.

- Ablation studies were conducted to gain deeper insights into the model's functioning and the impact of different components, allowing the team to fine-tune the model and enhance its efficiency and effectiveness.

- In comparative tests, Solar 10.7B consistently outperformed other models of similar size, highlighting its superior design and training methodology that helps mitigate biases.

Ensuring Ethical Use:

- The development of Solar 10.7B was guided by a strong commitment to maintaining high ethical standards, evident in the careful selection of training datasets and the alignment tuning phase.

- This ethical alignment is not just a theoretical concern but a practical necessity, as AI models increasingly interact with humans in various capacities. Solar 10.7B's training methodology balances technical proficiency with ethical responsibility.

- In contrast to other advanced models that often require complex, resource-intensive modifications, Solar 10.7B's efficiency and ethical considerations make it a more practical choice for a wide array of applications.

- The team recognizes the potential risks of these AI tools being used for harmful purposes, such as spreading misinformation or biased content. They emphasize the importance of transparent and responsible use of these technologies to increase public welfare.

Overall, Solar LLM 10.7B's approach to bias mitigation and ethical considerations sets it apart from other LLMs. By prioritizing data integrity, model interpretability, and ethical alignment, Solar 10.7B aims to deliver high-performing and socially responsible AI solutions.

Security and Privacy Measures for Solar 10.7B

Security and privacy are crucial aspects that have been carefully addressed in the development and deployment of Solar 10.7B:

- Data Contamination and Bias Mitigation: Solar 10.7B's training data was meticulously curated to minimize data contamination and biases, ensuring the reliability and integrity of the model's outputs. This rigorous data handling process is essential for maintaining security and privacy by preventing the incorporation of biased or harmful information into the model.

- Ethical Considerations: The team behind Solar 10.7B emphasized the importance of upholding high ethical standards in AI development, evident in the model's training and operation. The ethical alignment of Solar 10.7B was a key focus, ensuring that the model's outputs adhere to ethical norms and societal values, thus safeguarding against potential ethical breaches.

- Privacy Policy: Solar 10.7B's development includes privacy considerations, as indicated by the presence of a privacy policy associated with the model. This demonstrates a commitment to protecting user data and ensuring that privacy concerns are addressed in the model's implementation.

- Comparative Analysis: In comparative tests, Solar 10.7B consistently outperformed models of similar size, reflecting the strength of its design and training methodology.

- Environmental Impact: While not directly related to security and privacy, the environmental concern associated with the energy consumption of large-scale computing resources for training and inferencing the model is highlighted. This environmental impact consideration is essential in the context of sustainability and responsible AI development.

In comparison to other Large Language Models (LLMs), Solar LLM 10.7B's focus on data integrity, ethical alignment, and privacy considerations sets it apart as a model that not only excels in performance but also prioritizes security and privacy. By addressing potential biases, upholding ethical standards, and implementing privacy policies, Solar 10.7B demonstrates a comprehensive approach to security and privacy that enhances its trustworthiness and reliability in various applications.

Comparative Analysis of Solar 10.7B's Transformer Design

The following comparison looks at Solar 10.7B's transformer design and highlights what sets it apart from other models.

Solar 10.7B, a large language model with 10.7 billion parameters, showcases exceptional performance in various natural language processing tasks, setting it apart from other models like Mixtral 8X7B and Mistral 7B. The transformer architecture of Solar 10.7B, based on the Llama2 architecture, offers a blend of speed and accuracy, making it a powerful tool in language processing. This architecture plays a crucial role in the model's efficiency and effectiveness in handling complex language tasks.

One distinctive feature of Solar LLM 10.7B's transformer design is the innovative Depth Up-Scaling (DUS) technique it employs. DUS strategically enhances the model's depth by inserting additional hidden layers, enabling improved performance on tasks requiring extensive contextual understanding and the handling of complex representations. This approach excels in capturing long-range dependencies and enhancing the model's ability to process information effectively.

Mixture of Experts (MoE), the approach used by Mixtral 8x7B, scales differently: a learned router sends each token to a small subset of expert feed-forward networks, so only a fraction of the parameters is active for any given token. This keeps per-token compute low relative to the total parameter count, but it requires routing logic and specialized training and inference infrastructure. DUS instead yields a plain dense model in which every layer runs for every token, so it works with standard training and inference frameworks without modification.
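For contrast with a dense DUS model, the sketch below shows the core idea of MoE routing: only the top-k experts run for a given input. It is a simplified, hypothetical example on scalar inputs with fixed router scores (Mixtral routes each token to 2 of its 8 experts per layer); real MoE layers operate on vectors, learn the router, and add load-balancing losses.

```python
import math
from typing import Callable, List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, router_logits: List[float],
                experts: List[Callable[[float], float]], k: int = 2) -> float:
    """Run only the top-k experts for input x and mix their outputs."""
    # Pick the k experts with the highest router scores.
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Normalize gate weights over the selected experts only.
    gates = softmax([router_logits[i] for i in top])
    # Unselected experts are never evaluated, which is where MoE saves compute.
    return sum(g * experts[i](x) for g, i in zip(gates, top))


# Four toy "experts" that just scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
print(moe_forward(10.0, [0.1, 2.0, 2.0, -1.0], experts, k=2))  # 25.0
```

In a dense model such as Solar 10.7B, the equivalent of every "expert" runs for every token, which is simpler to serve but uses all parameters per step.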

Overall, Depth Up-Scaling is what sets Solar 10.7B's transformer design apart: it increases depth by reusing pretrained layers rather than by adding experts or training from scratch. Combined with initialization from Mistral 7B weights and the model's fine-tuning strategy, this design underpins its strong results across diverse language tasks.

Performance Optimization Techniques in Solar 10.7B

Solar 10.7B, a large language model with 10.7 billion parameters, employs several performance optimization techniques to achieve strong results in natural language processing tasks. These techniques span architecture-level and software-level improvements, discussed below:

Architecture-Level Improvements

- Depth Up-Scaling (DUS): Solar 10.7B's core innovation is DUS, which builds a larger model from an existing pretrained one instead of training from scratch. Depth is increased by splicing together trimmed copies of the base model's layers, and continued pre-training adapts the combined network. This makes scaling up much cheaper in training compute, although the resulting 10.7B-parameter model naturally costs more to run than its 7B base.

- Compact Architecture: Built on the Llama 2 architecture with 10.7 billion parameters, Solar 10.7B is modest in size compared with the models it competes against, so it delivers strong results without a proportional increase in resource consumption. This makes it a practical choice for applications where computational efficiency is crucial.

Software-Level Improvements

- Instruction Fine-Tuning: Solar 10.7B-Instruct, a variant of the model, is fine-tuned for instruction following. Upstage reports that it outperforms larger and more complex models, such as the Mixture-of-Experts model Mixtral 8x7B-Instruct, on standard benchmark averages.

- Supervised Fine-Tuning (SFT) and Direct Preference Optimization (DPO): The model is fine-tuned with SFT, which trains it on curated instruction-response examples, and DPO, which adjusts it directly on pairs of preferred and rejected responses without training a separate reward model. Together these methods produce significant gains in instruction following.

- Continued Pre-Training and Two-Stage Fine-Tuning: After depth up-scaling, the model undergoes continued pre-training to recover the performance lost when the layers are spliced together. Fine-tuning then proceeds in two stages, instruction tuning followed by alignment tuning, which helps the model handle a wide range of tasks and adapt to new ones.

- Quantization and Model Pruning: These are not part of Solar's training recipe, but, as with other open-weight models, they can be applied at deployment time. Quantization stores the weights at lower precision (for example, 8-bit or 4-bit integers instead of 16-bit floats), which substantially reduces memory requirements and computational cost at some risk to accuracy. Pruning can additionally remove redundant or less important parameters.
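The DPO objective can be illustrated for a single preference pair. Given the summed log-probabilities of a chosen and a rejected response under the model being trained and under a frozen reference model, the loss is -log σ(β · [(chosen gap) − (rejected gap)]). This is a minimal sketch of the published DPO loss, not Upstage's training code; `beta = 0.1` is an illustrative default, not Solar's reported setting.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) pair.

    Each argument is the summed log-probability of a full response under either
    the model being trained (policy) or the frozen reference model.
    """
    chosen_reward = policy_chosen_logp - ref_chosen_logp
    rejected_reward = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_reward - rejected_reward)
    # -log(sigmoid(z)) = softplus(-z), computed in a numerically stable form.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


print(round(dpo_loss(-12.0, -15.0, -12.0, -15.0), 4))      # 0.6931: policy equals reference
print(dpo_loss(-10.0, -15.0, -12.0, -13.0) < math.log(2))  # True: policy now favors the chosen response
```

Minimizing this loss raises the probability of preferred responses relative to the reference model, with `beta` controlling how far the policy may drift from it.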

Solar LLM 10.7B's performance optimization therefore combines architecture-level improvements, namely Depth Up-Scaling and a compact architecture, with software-level improvements: continued pre-training, instruction tuning with SFT, alignment tuning with DPO, and, at deployment time, optional quantization. Together these let the model achieve strong performance on natural language processing tasks while keeping computational costs manageable.
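As a rough illustration of what deployment-time quantization does, the sketch below applies symmetric per-tensor int8 quantization to a handful of weights. It is a simplified example rather than any specific library's method; production schemes such as GPTQ or AWQ use per-channel or group-wise scales and calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]


w = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(w)
print(q)  # [50, -127, 0, 100]
errors = [abs(a - b) for a, b in zip(w, dequantize_int8(q, scale))]
print(max(errors) <= scale / 2)  # True: error is at most half a quantization step
```

Storing one byte per parameter instead of two halves the weight memory, from roughly 21.4 GB at 16-bit precision to about 10.7 GB for a 10.7B-parameter model.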

Integration of Solar 10.7B with Other AI Technologies

Solar 10.7B is a text-only model, so integrating it with other AI technologies such as computer vision and speech recognition means pairing it with models that handle those modalities. Several approaches are possible:

- Fine-Tuning for Specific Tasks: Solar 10.7B cannot read pixels or audio directly, but it can be fine-tuned on the text that vision and speech models produce, such as image captions, detected object labels, or transcripts. This adapts it to reason about that output within a specific domain.

- Collaborative Models: Solar 10.7B can be integrated into collaborative models that combine its strengths in natural language processing with the capabilities of computer vision or speech recognition models. This integration can lead to more comprehensive AI systems that excel in multiple domains.

- API Integration: Solar 10.7B can be called through an API and its outputs passed to other components, so it can exchange information with vision, speech, or other models inside a larger AI application.

- Multi-Modal Learning: With additional components, such as an image or audio encoder whose outputs are projected into the model's embedding space and trained jointly, a Solar-based system could learn from text, images, and audio together. This would let it process information from several modalities at once, although it requires training beyond the released model.

- Transfer Learning: The language knowledge in Solar 10.7B can be reused in multimodal systems, for example as the text backbone to which a vision or speech encoder is connected. Starting from a strong pretrained language model can speed up learning in new domains compared with training from scratch.
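The simplest of these integrations is a pipeline in which a speech model produces text and the language model answers it. The sketch below is hypothetical: `transcribe` and `generate` are placeholder callables that, in a real system, would wrap a speech recognition model and a Solar 10.7B endpoint. Passing them in as arguments keeps the orchestration logic testable with stubs.

```python
from typing import Callable


def voice_query_pipeline(audio: bytes,
                         transcribe: Callable[[bytes], str],
                         generate: Callable[[str], str]) -> str:
    """Chain a speech-recognition step and a text LLM step (both are placeholders)."""
    transcript = transcribe(audio)
    if not transcript.strip():
        return "Sorry, I could not hear anything."
    # The LLM only ever sees text; the audio never reaches it directly.
    prompt = f"Answer the user's spoken question concisely.\n\nQuestion: {transcript}"
    return generate(prompt)


# Hypothetical stubs standing in for real models, for illustration only.
fake_asr = lambda audio: "what is depth up-scaling"
fake_llm = lambda prompt: f"[LLM reply to: {prompt.splitlines()[-1]}]"
print(voice_query_pipeline(b"\x00\x01", fake_asr, fake_llm))
# [LLM reply to: Question: what is depth up-scaling]
```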

The integration of Solar LLM 10.7B with other AI technologies like computer vision and speech recognition can be achieved through fine-tuning for specific tasks, collaborative models, API integration, multi-modal learning, and transfer learning techniques. These integration strategies can enhance the versatility and performance of Solar 10.7B in various AI applications that require a combination of natural language processing, image analysis, and speech understanding capabilities.

Solar 10.7B in Multimodal Learning Applications

Solar 10.7B itself processes only text, but it can serve as the language component of multimodal systems that handle text, images, and audio:

Multimodal Learning with Solar 10.7B

- Text-based Applications: Natural language processing is Solar LLM 10.7B's core strength, which makes it well suited to text-based tasks such as question answering, summarization, and language generation, where its depth-up-scaled architecture helps it handle complex language and contextual relationships.

- Image-based Applications: Solar 10.7B has no vision component of its own, but it can be combined with a computer vision model, typically an image encoder whose features are mapped into the language model's input. The combined system can then tackle tasks that involve both text and images, such as image captioning, visual question answering, and multimodal content generation.

- Audio-based Applications: To enable Solar 10.7B to process audio data, the model can be integrated with speech recognition models. This integration would allow the system to understand and respond to spoken language, opening up applications in voice-based assistants, audio transcription, and multimodal dialogue systems.
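One common way to connect an image encoder to a text-only LLM (used by LLaVA-style systems) is a learned projection that maps image features into the LLM's token-embedding space, so projected image "tokens" can be placed in front of the text. The sketch below shows only that data flow with toy dimensions; it is an assumption about how such a system could be built, not a released Solar component. In practice the dimensions would be much larger (Solar's hidden size is 4,096) and the projection weights would have to be trained.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # shape: [in_dim][out_dim]


def project(features: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Linear map: out[j] = sum_i features[i] * weight[i][j] + bias[j]."""
    return [
        sum(f * row[j] for f, row in zip(features, weight)) + bias[j]
        for j in range(len(bias))
    ]


def build_multimodal_sequence(image_features: List[Vector], text_embeddings: List[Vector],
                              weight: Matrix, bias: Vector) -> List[Vector]:
    """Project image features into the LLM embedding space and prepend them to the text."""
    image_tokens = [project(f, weight, bias) for f in image_features]
    return image_tokens + text_embeddings


# Toy sizes: 3-dim image features, 2-dim "LLM" embeddings (hypothetical values).
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [0.0, 0.0]
seq = build_multimodal_sequence([[1.0, 2.0, 3.0]], [[0.5, 0.5], [0.1, 0.9]], W, b)
print(seq)  # [[4.0, 5.0], [0.5, 0.5], [0.1, 0.9]]
```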

Advantages of Solar 10.7B in Multimodal Learning

- Efficient Scaling: DUS makes it comparatively cheap to build a larger model from an existing one, and the resulting model is moderate in size. This leaves more of a compute budget for the additional encoders that multimodal systems need, making Solar 10.7B a practical language backbone for real-world multimodal applications.

- Ethical Alignment: Solar 10.7B's training includes an alignment-tuning stage intended to bring its outputs in line with human preferences and societal norms. This matters in multimodal applications, where responses may involve sensitive or impactful content across different modalities, although alignment tuning reduces rather than eliminates the risk of harmful outputs.

- Interpretability and Explainability: Because Solar 10.7B is an open-weight model with a standard Llama 2-style architecture, developers can inspect it with existing interpretability tools, which helps them understand its outputs. This transparency is valuable in multimodal applications, where the model's reasoning and recommendations need to be communicated.

- Versatility and Adaptability: Solar 10.7B's versatile architecture and training methodology allow it to be fine-tuned and adapted to various multimodal tasks, leveraging its strong foundation in natural language processing and the ability to integrate with other specialized models.

Solar 10.7B's efficient scaling, alignment tuning, openness to inspection, and versatility make it a promising language backbone for multimodal applications involving text, images, and audio. Paired with suitable computer vision and speech recognition models, it can be part of multimodal systems that handle complex, real-world tasks responsibly.

Comparative Latency and Throughput Analysis for Solar 10.7B

Latency and Throughput Analysis

- Benchmark Performance: In Upstage's benchmark comparisons, Solar 10.7B achieved higher accuracy than Mistral 7B on a range of tests. These benchmarks measure output quality, not speed, so they do not show lower latency or higher throughput. Because Solar 10.7B has 48 layers to Mistral 7B's 32 and roughly 1.5 times as many parameters, it should be expected to be somewhat slower per generated token on the same hardware.

- API Integration: When Solar 10.7B is called through an API as one component of a larger system, for example alongside computer vision or speech recognition models, network round-trips and data handling between components add to end-to-end latency and can limit overall throughput.

- Efficient Scaling: Depth Up-Scaling (DUS) reduces the cost of building and training a larger model, not the cost of running it. At inference time Solar 10.7B behaves like any dense 10.7B-parameter transformer, though that is still small enough to serve on a single high-memory GPU, and smaller still when quantized.
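A back-of-the-envelope calculation shows why a larger dense model tends to decode more slowly. At batch size 1, generating each token requires reading every weight from memory once, so memory bandwidth divided by weight bytes gives a rough upper bound on tokens per second. The sketch below assumes a hypothetical accelerator with 1 TB/s of memory bandwidth and 16-bit weights, and ignores KV-cache traffic, batching, and kernel overheads; real numbers must be measured.

```python
def decode_tokens_per_second_upper_bound(num_params: float,
                                         bytes_per_param: float,
                                         mem_bandwidth_bytes_per_s: float) -> float:
    """Ceiling on single-stream decode speed for a dense, memory-bound model."""
    return mem_bandwidth_bytes_per_s / (num_params * bytes_per_param)


BANDWIDTH = 1.0e12  # hypothetical accelerator: 1 TB/s of memory bandwidth
solar = decode_tokens_per_second_upper_bound(10.7e9, 2, BANDWIDTH)    # 16-bit weights
mistral = decode_tokens_per_second_upper_bound(7.24e9, 2, BANDWIDTH)  # approx. parameter count
print(f"Solar 10.7B: {solar:.0f} tok/s, Mistral 7B: {mistral:.0f} tok/s")
# Solar 10.7B: 47 tok/s, Mistral 7B: 69 tok/s
```

Under these assumptions, the ratio between the two bounds simply tracks the parameter ratio, roughly 1.5x.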

Real-Time Use Cases

- Chatbots and Conversational AI: Chatbots need low latency and high throughput to answer user queries quickly. Solar 10.7B's accuracy gains over Mistral 7B make it attractive for conversational applications, but its serving speed should be measured on the target hardware rather than inferred from benchmark scores.

- Financial Document Analysis: Real-time financial document analysis requires efficient processing to extract insights quickly. Solar 10.7B's benchmark accuracy makes it a candidate for financial data analysis and investment-research workflows, provided its measured latency and throughput meet the application's requirements.

- Multimodal Learning: When Solar 10.7B is combined with computer vision and speech recognition models, end-to-end latency includes every stage of the pipeline, so the language model's speed is only one factor in how quickly multimodal data can be processed in real time.
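Because latency and throughput depend on hardware, batch size, and serving stack, they are best measured directly. The sketch below times any `generate` callable that returns a list of generated tokens; `fake_generate` is a hypothetical stub used only to show the interface, and would be replaced by a call to the actual Solar deployment.

```python
import time
from typing import Callable, Dict, List


def measure_generation(generate: Callable[[str], List[str]],
                       prompts: List[str]) -> Dict[str, float]:
    """Time a generate() callable that returns the list of generated tokens."""
    if not prompts:
        raise ValueError("need at least one prompt")
    latencies: List[float] = []
    total_tokens = 0
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        tokens = generate(prompt)
        latencies.append(time.perf_counter() - t0)
        total_tokens += len(tokens)
    elapsed = time.perf_counter() - start
    latencies.sort()
    return {
        "tokens_per_s": total_tokens / elapsed if elapsed > 0 else float("inf"),
        "median_latency_s": latencies[len(latencies) // 2],  # upper median for even counts
        "max_latency_s": latencies[-1],
    }


def fake_generate(prompt: str) -> List[str]:
    time.sleep(0.01)                 # simulated model latency (hypothetical stub)
    return ("token " * 20).split()   # 20 dummy tokens


stats = measure_generation(fake_generate, ["q1", "q2", "q3"])
print(stats)
```

This measures sequential, single-request behavior; a serving system that batches concurrent requests would need a concurrent load test instead.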

Solar 10.7B's accuracy gains over Mistral 7B, efficient scaling, and ease of integration with other AI technologies make it a promising model for real-time applications such as chatbots, financial document analysis, and multimodal systems, provided its measured latency and throughput on the chosen hardware meet each application's requirements.

Advanced NLP Applications Using Solar 10.7B

Solar 10.7B, a large language model with 10.7 billion parameters, provides a strong foundation for a variety of advanced natural language processing (NLP) applications:

Sentiment Analysis

Solar 10.7B's strong performance in natural language understanding tasks makes it well-suited for sentiment analysis applications. By fine-tuning the model on sentiment-labeled datasets, it can be leveraged to accurately detect and classify the sentiment expressed in text, such as customer reviews, social media posts, or other textual data. This capability can be valuable for applications like customer experience management, brand monitoring, and opinion mining.
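Before fine-tuning, a quick baseline is to prompt the instruction-tuned model and map its free-form reply onto a fixed label set. The sketch below assumes a `### User:` / `### Assistant:` prompt layout for Solar 10.7B-Instruct; that layout is an assumption, so check the model card or use the tokenizer's chat template for the exact format. The function names are hypothetical.

```python
from typing import Tuple

LABELS: Tuple[str, ...] = ("positive", "negative", "neutral")


def build_sentiment_prompt(text: str) -> str:
    # Assumed single-turn format; verify against the model's actual chat template.
    return (
        "### User:\n"
        "Classify the sentiment of the following review as positive, negative, or neutral. "
        "Reply with one word.\n\n"
        f"Review: {text}\n\n"
        "### Assistant:\n"
    )


def parse_sentiment(completion: str) -> str:
    """Map a free-form completion onto LABELS, or 'unknown' if it does not match."""
    words = completion.strip().lower().split()
    first = words[0].strip(".,!:;\"'") if words else ""
    return first if first in LABELS else "unknown"


print(parse_sentiment("Positive."))                   # positive
print(parse_sentiment("NEGATIVE - arrived broken"))   # negative
print(parse_sentiment("I think it is fine"))          # unknown
```

Returning `unknown` instead of guessing makes unparseable replies visible, which helps when deciding whether fine-tuning on labeled data is worth it.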

Language Translation

High-quality translation depends on handling nuance and context, which the extra depth added by Solar 10.7B's depth up-scaling (DUS) helps with. Translation quality is still likely to vary across language pairs, so the model should be evaluated on the target languages. By fine-tuning Solar 10.7B on parallel text or integrating it with specialized translation components, developers can build multilingual applications that translate accurately and efficiently.
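Long documents must be split into pieces that fit the model's context window, and splitting on sentence boundaries keeps each piece translatable on its own. The sketch below is a hypothetical helper that uses word count as a rough stand-in for token count; a real pipeline would count tokens with the model's tokenizer and leave room for the prompt and the translated output.

```python
import re
from typing import List


def chunk_for_translation(text: str, max_words: int = 300) -> List[str]:
    """Split text into sentence-aligned chunks of at most ~max_words words."""
    if not text.strip():
        return []
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current: List[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())
        # Start a new chunk if this sentence would overflow the current one.
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)  # an over-long single sentence becomes its own chunk
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


print(chunk_for_translation("One two. Three four five. Six.", max_words=3))
# ['One two.', 'Three four five.', 'Six.']
```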

Chatbot Development

Solar LLM 10.7B's strong performance in instruction-following and natural language generation tasks makes it an excellent foundation for building advanced chatbot applications. By fine-tuning the model on conversational data and integrating it with other components like dialogue management and natural language understanding, developers can create highly engaging and intelligent chatbots that can handle complex user interactions across various domains.
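A chatbot must resend the conversation history with each turn while staying within the context window. The sketch below renders a multi-turn prompt and drops the oldest turns first once a word budget is exceeded, always keeping the latest message. The `### System:` / `### User:` / `### Assistant:` headers are an assumed format; with Hugging Face Transformers, `tokenizer.apply_chat_template` applies the model's own template and is the safer choice. The function name and the word-based budget are hypothetical simplifications.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (role, text), where role is "user" or "assistant"


def build_chat_prompt(history: List[Turn], system: str = "", max_words: int = 1500) -> str:
    """Render a multi-turn prompt, dropping the oldest turns to stay under a word budget."""
    kept: List[Turn] = []
    budget = max_words - len(system.split())
    # Walk backwards so the most recent turns are kept first.
    for role, text in reversed(history):
        n = len(text.split())
        if kept and n > budget:
            break
        kept.append((role, text))
        budget -= n
    kept.reverse()
    parts = [f"### System:\n{system}\n"] if system else []
    for role, text in kept:
        header = "### User:" if role == "user" else "### Assistant:"
        parts.append(f"{header}\n{text}\n")
    parts.append("### Assistant:\n")  # cue the model to produce the next reply
    return "\n".join(parts)


history = [("user", "hi"), ("assistant", "hello"), ("user", "tell me more")]
print(build_chat_prompt(history, max_words=4))  # oldest "hi" turn is dropped
```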

Additionally, Solar 10.7B's compact architecture and efficient scaling capabilities make it a practical choice for deploying these advanced NLP applications in real-world scenarios, where computational resources and latency requirements are critical factors.

Overall, the versatility and performance of Solar 10.7B position it as a powerful tool for developing a wide range of advanced NLP applications, from sentiment analysis and language translation to sophisticated chatbot systems, leveraging its strong foundation in natural language processing.

Future Directions and Innovation in Solar 10.7B

As Solar 10.7B demonstrates impressive capabilities, several potential future directions and innovations could further advance this large language model:

Scaling and Efficiency Improvements

The core innovation in Solar 10.7B is Depth Up-Scaling (DUS), which builds a larger model from an existing one without training from scratch. Future iterations could refine this approach, potentially by combining it with techniques such as Mixture of Experts (MoE) or other novel architectural designs. Improving efficiency and scalability will be crucial for deploying Solar models in real-world applications with diverse computational requirements.

Multimodal Integration

While Solar 10.7B is primarily focused on natural language processing, there is potential to integrate it with other AI technologies, such as computer vision and speech recognition. By developing multimodal capabilities, future versions of Solar 10.7B could handle a broader range of inputs and outputs, enabling more comprehensive and intelligent systems. This could involve fine-tuning the model on multimodal datasets or using transfer learning to apply its strong language understanding in other domains.

Specialized Variants and Fine-Tuning

The introduction of Solar 10.7B-Instruct, a variant fine-tuned for instruction-following tasks, demonstrates the potential for creating specialized versions of the model. Future developments could explore fine-tuning Solar 10.7B for other specific applications, such as sentiment analysis, language translation, or task-oriented dialogue. These specialized variants could further enhance the model's performance and versatility in targeted use cases.

Ethical and Responsible AI Advancements

Solar 10.7B's emphasis on ethical alignment and responsible development sets a positive precedent. Future iterations of the model could explore even more advanced techniques for ensuring ethical and trustworthy AI, such as incorporating explicit ethical reasoning capabilities, transparency mechanisms, or advanced safety measures to mitigate potential misuse.

Continued Research and Collaboration

As the field of large language models continues to evolve rapidly, the team behind Solar 10.7B could engage in further research, collaborations, and knowledge-sharing with the broader AI community. This could lead to the development of next-generation LLMs that build upon the innovations and lessons learned from Solar 10.7B, pushing the boundaries of what is possible in natural language processing and beyond.

By focusing on scaling and efficiency improvements, multimodal integration, specialized variants, ethical advancements, and continued research, future iterations of Solar 10.7B have the potential to drive significant progress in the field of large language models and their real-world applications.

Comparing Solar 10.7B with State-of-the-Art LLMs

Here is how Solar 10.7B compares with other state-of-the-art large language models (LLMs):

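Solar 10.7B

- Strengths: efficiency and strong performance through Depth Up-Scaling (DUS)

Other State-of-the-Art LLMs

- Characteristics: often larger and more resource-intensive, for example Mixture-of-Experts models such as Mixtral 8x7B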

Comparison

- Efficiency vs. Resource Intensity: Solar 10.7B's strength lies in combining efficiency with performance through the DUS technique, in contrast to more resource-intensive models such as Mixtral 8x7B.

- Adaptability and Specialization: Solar 10.7B's variant for instruction-following tasks highlights its adaptability and specialization, potentially offering advantages in specific applications compared to other LLMs.

- Performance and Practicality: Solar 10.7B's balance of performance and efficiency positions it as a practical choice for a wide range of NLP tasks, distinguishing it from models with specialized but resource-intensive capabilities.
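The resource gap is easiest to see in the memory needed just to hold the weights, since every parameter of a model, including all MoE experts, must be resident to serve it. The sketch below uses approximate public parameter counts (Mixtral 8x7B has about 46.7B total parameters, of which about 12.9B are active per token) and ignores the KV cache, activations, and quantization metadata, so real requirements are higher.

```python
# Approximate total parameter counts; all weights must be loaded to serve the model.
MODELS = {
    "Mistral 7B": 7.24e9,
    "Solar 10.7B": 10.7e9,
    "Mixtral 8x7B": 46.7e9,
}
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(params: float, precision: str) -> float:
    """Gigabytes (1e9 bytes) needed to store the weights at a given precision."""
    return params * BYTES_PER_PARAM[precision] / 1e9


for name, params in MODELS.items():
    row = ", ".join(f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{name:<13} {row}")
```

At 16-bit precision, Solar 10.7B needs roughly 21.4 GB for its weights versus about 93 GB for Mixtral 8x7B, which is the practical meaning of "less resource-intensive" here.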


In a Nutshell

Solar 10.7B stands out for its efficiency, performance, and adaptability, particularly through the DUS technique and specialized variants. While other state-of-the-art LLMs may excel in specific domains or tasks, Solar 10.7B's overall balance of efficiency and performance makes it a competitive and practical choice for various natural language processing applications.